Toggled notes, in case anyone wants to yell at me about:

Too much math? Too little math?

- I’ve kept just enough math to make the ideas make sense — no more, no less. If you're here to study, well… good luck. I’ll link some relevant resources I actually used while learning or writing this, in case you want to dive deeper.

Not a fan of how this looks?

- Look, I’m a developer who tried. I wrangled CSS until it kinda worked. If the fonts or layout are bothering you, here’s a Notion version of this post — clean, minimal, and easier on the eyes (or your judgment).

Double dash AI-generated?!

- Ah yes, the double dash — clear proof the robots are taking over. Please, listen to some Vogon poetry.

Imagine trying to describe a photograph to someone over the phone without being able to see it yourself. That’s essentially the challenge computer scientists faced in the 1950s when they first attempted to teach machines to ‘see’.

What is computer vision, anyway?

If you’re new to the field, you might assume it’s just deep learning (turtles?) all the way down — and you wouldn’t be alone. These days, deep learning is everywhere — plastered across research papers, dominating Twitter hot takes (I refuse to call it X), and clogging up your LinkedIn feed thanks to self-declared "AI thought leaders" who discovered convolutional layers last Tuesday. It’s been hyped as the second coming of intelligence, capable of solving everything from image recognition to world peace.

But here’s the thing: computer vision wasn’t always about tossing giant neural networks at images and hoping for the best. Back before GPUs became altars for worship (and NVIDIA’s revenue rivaled the GDP of entire countries), the field was built on clever algorithms and hand-crafted features — designed by researchers who actually had to think outside the box, not just feed another prompt into one.

In this post, we’re rewinding to the days before deep learning — before AlexNet, before pixels had “brains,” and before every vision problem was solved with a 100B-parameter model and a sprinkle of hype. Back then, progress came from math, and insights had to be earned. This is the story of how computers first learned to see — and how those early hacks paved the way for the AI overload we live with today.

The biological foundations of vision

Humans have been fascinated by vision for centuries, long before the rise of computer algorithms. Thinkers like Leonardo da Vinci and Isaac Newton explored the optics of the human eye and the nature of color, laying early foundations. But it was Hermann von Helmholtz in the 19th century who is often credited with launching the first modern study of visual perception. By examining the eye, he realized it couldn’t possibly deliver a high-resolution image on its own; the raw input was simply too limited. Helmholtz proposed that vision wasn’t just a matter of light hitting the retina, but a process of unconscious inference: the brain fills in the gaps, drawing on prior knowledge and experience to make educated guesses about the world.

The next major breakthrough in understanding vision came from the work of Hubel and Wiesel in the 1950s and ‘60s. They discovered that visual processing happens in layers, with each layer adding structure and meaning to the raw input from the eyes. While light enters through the cornea and lens to project an image onto the retina ( a thin sheet of neural tissue lined with photoreceptors), the specialized cells translate light into electrical signals, which are then sent down the optic nerve to the brain’s visual cortex. There, a hierarchical network of neurons decodes the scene, gradually extracting features like edges, textures, and shapes; turning photons into perception.

This brings up a question.

Isn’t Computer Vision just Image Processing?

Not quite. Digital Image Processing (DIP) operates at a lower level and focuses on manipulating pixel data to enhance or transform images without interpreting their content. Noise reduction, edge detection, contrast adjustment, and sharpening — all of these techniques are essentially tasks that improve visual quality and prepare images for further analysis. For instance, medical imaging uses DIP to clarify MRI and CT scans, while Photoshop applies filters to adjust brightness, color or create posters for the Titanic movie sequel (r/photoshopbattles is a fun place). Crucially, DIP outputs modified images, not insights.

Computer Vision (CV) focuses on enabling machines to interpret and understand visual content at a semantic level, by aiming to replicate human visual cognition. Extracting meaningful information from images or videos — such as identifying objects, recognizing faces, or analyzing scenes — and using that understanding to make decisions is the key tenet of computer vision. Level-4 autonomous vehicles like Waymo and Zoox use CV combined with other systems to detect pedestrians, interpret traffic signs and drive around city blocks with little to no human supervision. Deep learning-based CV systems learn patterns and context from data, moving beyond raw pixels to high-level comprehension.

While distinct, DIP can be considered a subset of CV and they often collaborate. Image processing often serves as a preprocessing step for computer vision: enhancing input data (e.g. removing water from underwater images) can significantly improve task accuracy. Conversely, CV can guide DIP — such as using object detection to apply selective enhancements to specific regions of an image.

To put it in simple terms:

Digital Image Processing answers: “How can I improve this image?”

Computer Vision answers: “What have I learnt from this image?”

The Early Experiments (1950s-60s)

Classic computer vision took its first steps in the 1950s, with the first digital scanner built at the National Institute of Standards and Technology, by Russell Kirsch and his team. The scanner used a photo-multiplier tube to detect light at a given point and produced an amplified signal that a computer could read and store into memory.

Around the same time, researchers began exploring how machines might interpret that data, with early efforts focused on recognizing simple shapes and patterns. Among the pioneers was Frank Rosenblatt, who introduced the single-layer perceptron — a primitive but groundbreaking attempt at mimicking the brain’s ability to recognize patterns. It was one of the first neural networks, and while limited, it sparked a wave of interest in teaching machines to “see”. However, Rosenblatt and his contemporaries were not very successful in their attempts to train multilayer perceptrons, and Minsky and Papert’s 1969 book effectively killed interest in perceptrons, until its resurgence in the ‘80s with the development of backpropagation.

A widely circulated myth during this time is that Minsky hired a student to develop computer vision as an undergraduate summer project, by hooking up a camera to a computer. In fact, Minsky and Papert were working on existing research, particularly the research of Larry Roberts (also known for helping create the Internet!). It was this context that led to the ambitious Summer Vision project, in a memo from July 1966 where Papert explained the goal of the project being to successfully divide, describe and identify backgrounds and objects in images. Needless to say, it was not a success at the outset, but the project did serve as the foundation for decades of experimentation for mathematicians and computer scientists.

More importantly, Larry Robert’s PhD thesis laid the foundation for object recognition and scene reconstruction, where he discussed the possibilities of extracting 3D geometrical information from 2D images. His work demonstrated how algorithms could identify edges, corners and surfaces in images of simple block shapes, shifting the focus from hardware to software-based interpretation of visual data.

The Algorithmic Foundations (1970s-80s)

The following decade saw the development of several key algorithms and techniques in computer vision.

1. Edge Detection

Robert’s pioneering work demonstrated the use of algorithms to identify edges in images using gradient calculations. This led to the development of three foundational algorithms:

a. Sobel Filter

This filter introduced a simple, yet effective method for detecting edges by convolving a image with discrete differentiation kernels. The Sobel filter approximates the first-order derivative in the horizontal and vertical directions using two kernels:

The resulting gradient magnitude, highlights edges based on sharp changes in intensity. It’s built-in smoothing effect (due to weighting of the central row/column) gives it moderate noise resistance, making it popular in early image processing applications like character recognition and medical imaging.

can be visualized as a grayscale image, where the intensity of each pixel represents the strength of the gradient at that point. High gradient magnitude values usually indicate edges in an image. However, in areas with high noise levels, the gradient magnitude can be high, even if there is no meaningful change in intensity.

b. Canny Edge Detector

This algorithm formalized the criteria for an “optimal” edge detector: good detection, good localization, and minimal response. John Canny introduced a multi-stage algorithm (in 1986) that:

- Applies Gaussian filtering to reduce noise.

- Computes gradient magnitude/direction (using derivatives).

- Uses non-maximum suppression to thin edges (retains local minima only).

- Employs double thresholding along with edge tracking by hysteresis to distinguish between strong and weak edges, while connecting weak edges that are likely part of the same boundary.

This comprehensive approach made the Canny detector particularly effective in scenarios such as object contouring, where precision and reliability were critical. It was a staple for feature extraction in early computer vision pipelines.

c. Laplacian of Gaussian (LoG)

LoG builds on the idea of second-order derivative analysis to detect zero-crossing, which often correspond to edges. It combines two operations:

- Gaussian smoothing to reduce high-frequency noise.

- Laplacian filtering, computed as , to detect regions where intensity changes sharply in multiple directions.

The resulting response identifies edge-like structures where the Laplacian crosses zero. LoG played a fundamental role in blob detection and formed a precursor to advanced methods like the Difference of Gaussians (used in SIFT).

Despite their effectiveness, classical edge detectors relied on hand-crafted filters and assumed relatively clean, uniform intensity changes. In real-world settings with textured backgrounds, occlusion, or variable lighting, these assumptions often broke down.

2. Feature Extraction

Alongside edge detection, researchers explored methods to extract distinctive local features — important for tasks like object recognition, stereo matching, and image stitching. The goal was to develop robust, repeatable, and invariant detectors; capable of identifying the same feature across changes in scale, orientation, illumination, and viewpoint:

Corner and Blob Detection

Early methods focused on detecting points with significant intensity variation in multiple directions; typically corners or blobs:

- Moravec Corner Detector (1977): One of the first algorithms to identify points of interest by measuring local intensity variation in multiple directions. However, it was not rotation-invariant and suffered heavily from noise sensitivity, often leading to false corner detections.

- Harris Corner Detector (1988): Built upon Moravec’s idea, the Harris corner detector introduced a mathematically grounded approach based on the structure tensor (second-moment matrix). A large positive R (Harris response) indicates a corner. The Harris detector offers improved robustness to noise, rotation-invariance, and partial invariance to affine intensity changes. However, it does not address scale invariance, limiting its effectiveness under significant zoom or object size variation.

Scale-Invariant Feature Transform (SIFT) Precursors:

While SIFT was formally introduced by David Lowe in 1999, its principles originated in 1980s work on describing keypoints invariant to changes in scale, rotation, and illumination:

- Rotation and Scale-Invariant Keypoints: Researchers explored how to identify salient image points that remain stable under geometric transformations. This included efforts to develop detectors invariant not only to image translation, but also to rotation and object size variations.

- Multi-scale representations: The theory of scale-space, introduced by Witkin and Koenderink, attempting to analyze images at multiple levels of Gaussian smoothing. This allowed features to be detected consistently at different scales, enabling robustness to zoom and resolution changes.

- Blob Detection Using Second-Order Derivatives: The use of Laplacian of Gaussian (LoG) filters to detect blobs was a crucial step toward scale invariance. Later, Difference of Gaussian (DoG) filters were used as efficient approximations, a concept integral to SIFT’s keypoint detection.

- Orientation Histograms for Rotation Invariance: Techniques for assigning a dominant orientation to each keypoint based on local gradient directions were developed, enabling the descriptor to be rotation invariant. Early descriptors leveraged histograms of gradient orientations to capture the local image structure.

These techniques laid the groundwork for robust matching across different views or lighting conditions, though full scale invariance remained elusive until SIFT.

3. Shape detection: Hough Transform

Originally patented in 1962 for analyzing bubble chamber photographs and later generalized by Duda and Hart in 1972, the Hough transform marked a pivotal advance in the quest to move from raw pixel data to meaningful shape recognition. Their technique enables detection of simple geometric shapes — even when shapes are noisy, fragmented, or partially occluded — by reformulating the problem as a voting process in parameter space rather than searching directly in pixel space.

For example, to find lines, each edge point in the image is mapped to all possible lines that could pass through it (represented by the equation , where is the distance from the origin and is the angle of the line). Every edge point “votes” for all the (,) combinations that can explain its position; these votes are collected in a discretized accumulator array and peaks in this array reveal the most likely geometric structures in an image. This exemplified the transition from detecting local features (edges, corners) to assembling them into coherent global structures

This makes the Hough transform robust to noise and missing data; shapes are detected even in the presence of clutter, noise, or missing segments, making it invaluable in industrial and robotics applications, where detecting lines, circles, or other basic shapes with precision is critical.

4. Stereo Vision & 3D Reconstruction

One of the early challenges in computer vision was enabling machines to perceive depth — reconstructing a three-dimensional understanding of the world from two-dimensional images. Larry Robert’s PhD thesis described how to infer 3D information about solid objects from 2D images by reducing scenes to simple geometric shapes. Inspired by human binocular vision, stereo vision aims to estimate depth by comparing two images of the same scene taken from slightly different viewpoints, analogous to our left and right eyes.

The key idea is to find corresponding points in both images and compute their disparity (difference in horizontal position between matching points). Greater disparity indicates closer objects. Early methods like block matching and correlation-based approaches compared small patches across the image pair to compute these disparities.

Advanced techniques such as Lucas-Kanade leverage image gradients to track flow (how features shift between views). These methods allowed for more accurate correspondence estimation, especially in regions with continuous intensity changes.

Once disparities are established, triangulation is applied using geometric principles. If the baseline (distance between cameras) and intrinsic camera parameters are known, the 3D position of each point in the scene can be recovered (known as stereo reconstruction), resulting in a depth map or point cloud representing the scene’s geometry.

Stereo vision is foundational for applications like autonomous driving, robot navigation, and augmented reality, where understanding depth is critical. It also paved the way for modern depth sensors, including structured light and time-of-flight sensors used in smartphones and robotics.

These developments were a major leap forward, moving machines from flat image understanding to rich spatial perception.

5. Neocognitron

Introduced by Kunihiko Fukushima in 1980, the Neocognitron marked a significant milestone in bio-inspired computer vision. Drawing on the hierarchical processing principles identified by Hubel and Wiesel — visual understanding begins with detection of simple features like edges — the Neocognitron implemented a layered architecture. Early layers extracted simple features (edges, corners, etc.), while deeper layers progressively combined these to recognize more complex shapes and objects.

A key feature of the Neocognitron was its ability to achieve shift invariance, possible through alternating two types of layers:

- S-cells (simple cells): Detect localized features at specific positions.

- C-cells (complex cells): Introduce tolerance to small translations and distortions by pooling responses from neighboring S-cells.

Sounds familiar? That’s ‘cause the Neocognitron directly inspired the development of the modern convolutional neural network. Today’s deep learning models for image recognition owe much of their architecture to Fukushima’s pioneering work.

6. Scale-Invariant Feature Transform (SIFT)

Introduced by David Lowe in 1999, the Scale-Invariant Feature Transform (SIFT) became one of the most influential breakthroughs in classic computer vision. Designed to detect and describe local image features (keypoints), SIFT stood out for its robustness to scale, rotation, and even moderate changes in viewpoint and illumination.

SIFT works by constructing a scale-space representation of the input image, generated by progressive blurring using Gaussian filters. Local extrema in this multi-scale space, which are identified using Difference of Gaussians, are considered candidate keypoints. This allows SIFT to detect scale-invariant features.

For each keypoint, an orientation is assigned based on local gradient directions, providing rotation invariance. The region around the keypoint is encoded into a 128-D vector descriptor, built from histograms of local gradient orientations. This descriptor is both distinct and compact, allowing for reliable matching across images.

SIFT features can be reliably matched across images, regardless of object occlusion, scaling or orientation; this made SIFT a go-to method for a variety of tasks including object recognition, image stitching, robotic mapping & navigation, and video tracking. Despite its computational complexity and earlier patent restrictions, SIFT remains a cornerstone of classic computer vision and inspired many subsequent algorithms, including SURF and ORB.

Practical Applications (1990s-2000s)

As computer vision matured through the latter decades of the 20th century, the focus shifted from foundational algorithms to real-world applications. Computer vision moved out of the lab and into everyday technology, industry, and even entertainment.

Facial Recognition and Biometrics

One of the most impactful breakthroughs was in facial recognition. Early recognition systems used statistical methods like Eigenfaces, which represented faces as combinations of key features extracted from a set of training images. This approach allowed computers to compare and identify faces with surprising accuracy for the time, laying the groundwork for modern biometric security systems.

Real-Time Video Processing

With advances in hardware and algorithms, computers could finally process images and video fast enough for real-time applications:

- Surveillance systems that could automatically detect and track people or vehicles.

- Gesture recognition for early human-computer interaction, such as in gaming and touchless interfaces.

- Traffic monitoring and license plate recognition, which became a staple in smart city infrastructure.

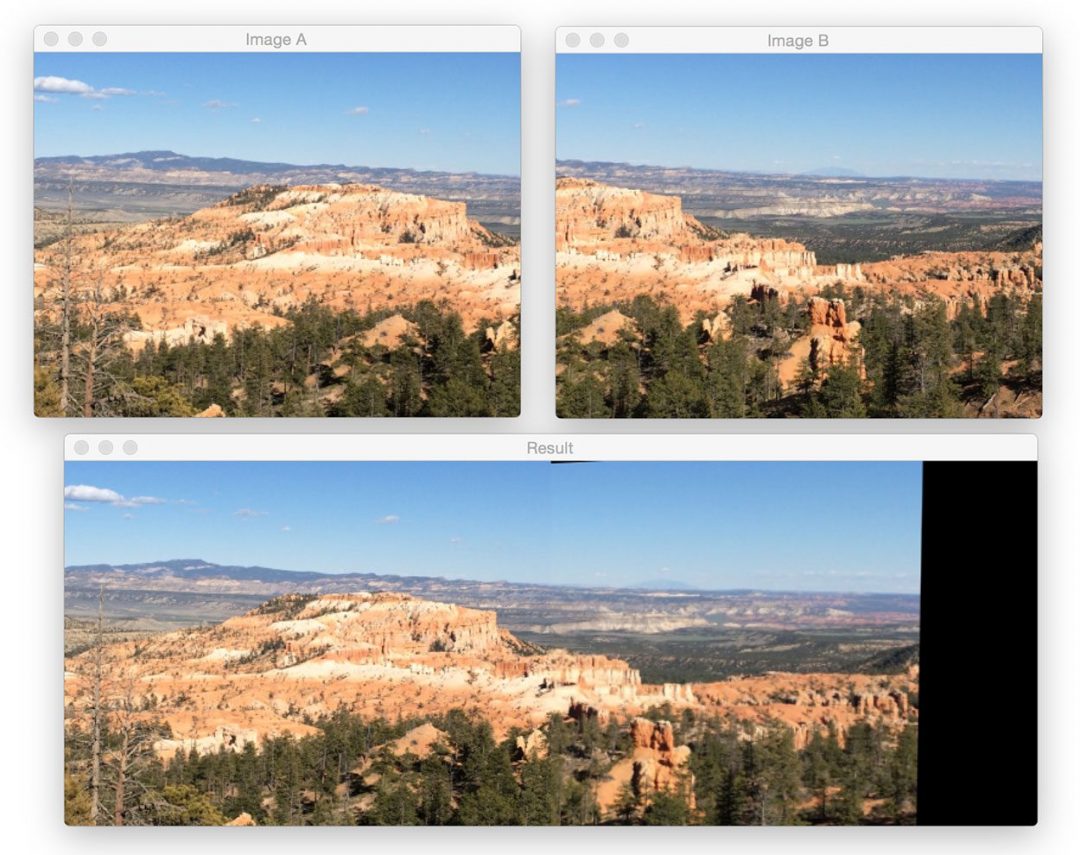

3D Reconstruction and Camera Calibration

Researchers also made significant progress in 3D reconstruction, with techniques like multi-view stereo and and structure-from-motion allowing computers to infer shape and position of objects in space. At the same time, camera calibration methods improved, making it possible to correct lens distortions and accurately map scene geometry.

Image Segmentation and Object Recognition

The ability to break down images into meaningful regions — image segmentation — became a cornerstone for tasks like medical imaging and autonomous navigation, with techniques like graph cuts and region growing allowing for precise separation of objects from their backgrounds. Template matching and early machine learning classifiers enabled object recognition in complex, cluttered scenes.

Integration with Computer Graphics

The line between computer vision and computer graphics began to blur, with applications like panoramic image stitching, image morphing, and augmented reality becoming possible. These advances laid the groundwork for today’s immersive technologies, from smartphone AR filters to virtual reality environments.

Transition to Machine Learning

As the 2000s progressed, computer vision began to outgrow its reliance on handcrafted features and rule-based algorithms. While mathematical filters and clever tricks could extract edges, corners, and blobs, these methods would often struggle with the complexity of real-world images; variations in lighting, background clutter, and object deformations frequently led to false positives and failure. It became increasingly clear that computers had to learn directly from data, rather than depend on designed heuristics.

This marked the rise of machine learning in computer vision; instead of manually designing every step, statistical models were trained on labeled images to recognize patterns and make predictions. ML classifiers such as Support Vector Machines (SVMs) and k-Nearest Neighbors (KNN) became the standard for tasks like object recognition and scene classification. When paired with robust feature descriptors like SIFT and Histogram of Oriented Gradients (HOG) — which distilled images into compact representations — these models significantly outperformed rule-based systems.

One of the most influential breakthroughs of this era was the Viola–Jones face detector. By combining simple Haar-like features with a cascade of boosted classifiers, it enabled real-time face detection on consumer hardware, powering cameras, phones, and surveillance systems. Similar pipelines were adapted for detecting pedestrians, vehicles, and other structured objects in complex scenes.

Despite their success, classic ML systems had limitations; they depended heavily on the quality of handcrafted features and more often than not required significant tuning for each new application, failing to generalize across domains. The need for end-to-end learning was becoming more apparent.

Conclusion

From tracing edges like a digital detective to matching blobs and corners with the enthusiasm of a puzzle-obsessed toddler, the early days of computer vision were nothing if not creative. These classic algorithms may seem quaint compared to today’s deep learning juggernauts, but they laid all the groundwork. And probably gave a few grad students some gray hairs along the way 🤷♂️

Next up: we trade in our handcrafted spectacles for neural networks and GPU-powered rocket boots. Get ready for the deep learning era, where computers finally get to flex their “visual cortex” and show us what real seeing looks like!

Resources

- Basics of Image Processing by Vincent Mazert

- Davide Scarramuza’s slides on Point Feature Detection and Matching

- Scale-Space Theory

- Kris Kitani's slides on Stereo Block Matching & Lucas-Kanade Flow

- Multi-view 3D Reconstruction

- https://homes.cs.washington.edu/~shapiro/EE596/notes/SIFT.pdf

- Tsai’s method for camera calibration